- Stage of development

TRL = 6-7

- Intellectual property

European priority patent application filed

- Intended collaboration

Licensing and/or co-development

- Contact

Antonio Jiménez

Vice-presidency for Innovation and Transfer

a.jimenez.escrig@csic.es

comercializacion@csic.es

- Reference

CSIC/AJ/066

Additional information

#Agriculture, livestock and marine science

#Agrotechnology

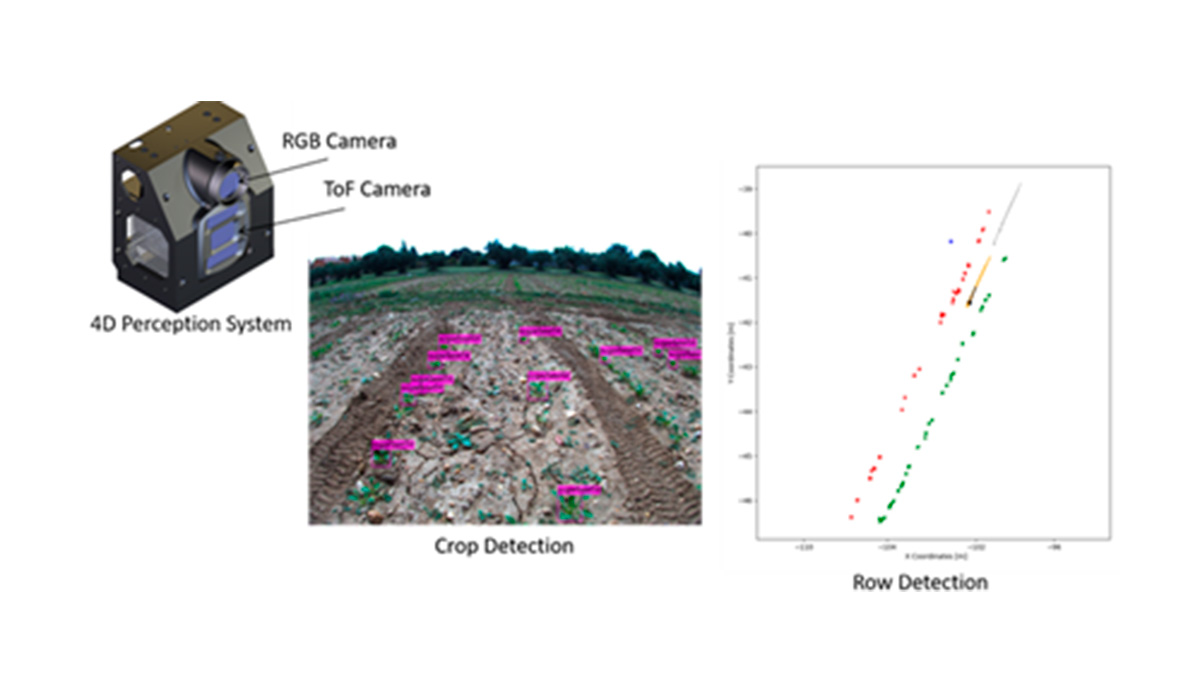

Method and system for improved guiding of robots in crop fields

4D perception system and a procedure for guiding autonomous robots in agricultural tasks by following rows of crops.

- Market need

Autonomous robots in the field are typically guided by Global Navigation Satellite Systems (GNSS), which have become crucial for navigation, for positioning with centimetre accuracy thanks to real-time kinematic (RTK) technology, and for timing applications. Furthermore, to operate an autonomous vehicle in a row crop, the vehicle’s controller needs to know the position of every row relative to the robot so that the robot does not drive over the rows and destroy them.

Alternatives to GNSS, such as perception systems based on computer vision, would be beneficial for navigation in row crops.

- Proposed solution

An orthogonal absolute reference frame (ARF) is defined with its origin attached to the robot.

- The soil plane is identified with respect to the ARF using a machine-vision system.

- A range imaging device, a time-of-flight camera, and an optional system for measuring distances to the subject are placed on board the robot. This device provides the system with three-dimensional (3D) information.
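As an illustration only, identifying the soil plane from 3D points could be sketched as below. The offer does not say how the plane is actually estimated, so the least-squares fit is an assumption, and the names `fit_soil_plane` and `origin_to_plane_distance` are hypothetical. The sketch also assumes that the points are already expressed in the ARF and that the frame's z axis is roughly perpendicular to the ground.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_soil_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points.

    Assumption: the plane is not vertical in the chosen frame.
    Returns the coefficients (a, b, c).
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    # Normal equations of the least-squares problem: m * [a, b, c] = r
    m = [[sxx, sxy, sx],
         [sxy, syy, sy],
         [sx, sy, float(n)]]
    r = [sxz, syz, sz]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("points are degenerate (e.g. collinear)")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace one column of m with r
        mc = [row[:] for row in m]
        for row in range(3):
            mc[row][col] = r[row]
        coeffs.append(_det3(mc) / det)
    return tuple(coeffs)


def origin_to_plane_distance(a, b, c):
    """Perpendicular distance from the frame origin to z = a*x + b*y + c."""
    return abs(c) / math.sqrt(a * a + b * b + 1.0)


# Synthetic points lying on a gently sloping plane 0.8 units below the origin
# (made-up values for illustration, not measured data).
sample = [(x, y, 0.1 * x - 0.05 * y - 0.8)
          for x in range(4) for y in (-1, 0, 1)]
a, b, c = fit_soil_plane(sample)
height = origin_to_plane_distance(a, b, c)
```

With real range data, outliers such as plant points would need to be rejected before or during the fit; that step is left out here.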

- Competitive advantages

- The proposed solution enables the use of mobile autonomous robots in crop fields without requiring excessive modification of the work and without relying on precise maps for navigation.

- It is useful and successful even at the early growth stages of the crop.